The word “consistency” is widely used in IT nowadays, especially regarding databases, but what does that really mean? Let’s find out!

Formal definition

In database systems, consistency refers to the requirement that any given database transaction must change affected data only in allowed ways.

Wikipedia (https://en.wikipedia.org/wiki/Consistency_(database_systems))

In other words, if you have specific rules about your data (invariants), every database transaction must preserve them. For example, in a cinema ticket booking system, every successful booking should decrement the number of available tickets, and that number must never drop below zero. If a transaction starts with data that is valid according to these rules, any changes made during the transaction should keep it valid. Seems straightforward, right? Nevertheless, there are a few very confusing but extremely important acronyms that contain a “C” (for consistency) yet have very little in common with the formal definition above.
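To make the idea of an invariant concrete, here is a minimal in-memory sketch of the cinema example. The names `Screening`, `book` and `SoldOutError` are made up for illustration, and no real database is involved; the point is only that a booking and the seat decrement must happen together.

```python
from dataclasses import dataclass, field


class SoldOutError(Exception):
    """Raised when a booking would push the seat count below zero."""


@dataclass
class Screening:
    total_seats: int
    available: int
    bookings: list[str] = field(default_factory=list)

    def invariant_holds(self) -> bool:
        # Rule 1: the counter never drops below zero.
        # Rule 2: every booking is matched by exactly one decrement.
        return (
            self.available >= 0
            and self.available + len(self.bookings) == self.total_seats
        )


def book(screening: Screening, customer: str) -> None:
    """One 'transaction': both changes are applied, or neither is."""
    if screening.available == 0:
        raise SoldOutError(f"no seats left for {customer}")
    screening.bookings.append(customer)
    screening.available -= 1


screening = Screening(total_seats=2, available=2)
book(screening, "John")
book(screening, "Jane")
try:
    book(screening, "Mike")
except SoldOutError:
    pass  # rejected, so the state stays valid

print(screening.available, screening.invariant_holds())  # 0 True
```

Note that the rules live entirely in the application code: nothing outside `book` knows what a valid state looks like.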

A C I D

The first, and probably the best-known (and oldest), acronym is ACID, which refers to the properties of database transactions:

- A – Atomicity

- C – Consistency

- I – Isolation

- D – Durability

I would rather shorten that to a more realistic AI (atomicity and isolation), but let’s leave durability for the next post and focus on the C (consistency) here.

According to Wikipedia:

Consistency in ACID transactions ensures that a transaction can only bring the database from one consistent state to another, preserving database invariants

The problem with that statement is that consistency is mostly a matter of application business logic. It is the application code’s responsibility to construct the database transaction correctly so that the invariants are preserved. Basically, the database cannot guarantee consistency on its own (yes, it offers some very simple constraint checks such as uniqueness), so if you write bad data (e.g. create a cinema booking without reducing the number of available seats), the database will not stop you! It is up to the application to decide what data is valid and what is not. So the letter “C” does not really belong in the “ACID” acronym.
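A small sketch with Python's built-in `sqlite3` module illustrates the point. The table and column names are assumptions made up for this example. The database enforces the one simple rule that was declared (a `CHECK` constraint), but it happily commits a transaction that breaks the business rule "every booking decrements the counter".

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE screenings (
        id INTEGER PRIMARY KEY,
        available INTEGER NOT NULL CHECK (available >= 0)
    );
    CREATE TABLE bookings (
        id INTEGER PRIMARY KEY,
        screening_id INTEGER NOT NULL REFERENCES screenings(id),
        customer TEXT NOT NULL
    );
    INSERT INTO screenings (id, available) VALUES (1, 1);
    """
)

# Buggy application code: it records bookings but forgets the decrement.
with conn:  # commits on success, rolls back on an exception
    conn.execute("INSERT INTO bookings (screening_id, customer) VALUES (1, 'John')")
    conn.execute("INSERT INTO bookings (screening_id, customer) VALUES (1, 'Jane')")

booked = conn.execute("SELECT COUNT(*) FROM bookings").fetchone()[0]
available = conn.execute(
    "SELECT available FROM screenings WHERE id = 1"
).fetchone()[0]
# Two bookings for a single seat, and the counter was never touched.
print(booked, available)

# The simple declared constraint, on the other hand, is enforced.
try:
    with conn:
        conn.execute("UPDATE screenings SET available = available - 2 WHERE id = 1")
    check_rejected = False
except sqlite3.IntegrityError:
    check_rejected = True
print(check_rejected)
```

The transaction was atomic, isolated and durable, yet the data is still invalid from the application's point of view.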

C A P

Another example of a misleading acronym is CAP, which refers to a theorem about guarantees in distributed data stores:

- C – Consistency

- A – Availability

- P – Partition tolerance

This is yet another acronym with one letter too many: a network partition is part of the problem, and it will happen whether you like it or not. So it should rather read something like “Choose Consistency or Availability in case of a Partition”.
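The “choose one during a partition” reading can be sketched in a few lines. This is a toy model, not how any particular database works: the `Replica` class, its `prefer` setting and the `Unavailable` error are all hypothetical names introduced for illustration.

```python
class Unavailable(Exception):
    """Raised when a replica refuses to answer rather than risk a stale read."""


class Replica:
    def __init__(self, prefer: str, seats: int) -> None:
        if prefer not in ("consistency", "availability"):
            raise ValueError(prefer)
        self.prefer = prefer
        self.seats = seats        # last value replicated to this node
        self.partitioned = False  # True while cut off from the leader

    def read(self) -> int:
        if self.partitioned and self.prefer == "consistency":
            # Cannot prove this is the most recent write, so return an error.
            raise Unavailable("cannot confirm the most recent write")
        return self.seats  # possibly stale while partitioned


cp = Replica(prefer="consistency", seats=1)
ap = Replica(prefer="availability", seats=1)

# The partition happens. Meanwhile the last seat is sold on the leader,
# so the true value is now 0, but neither replica can learn that.
cp.partitioned = ap.partitioned = True

try:
    cp_result = cp.read()
except Unavailable:
    cp_result = "error"
ap_result = ap.read()

print(cp_result, ap_result)  # error 1
```

The partition itself is never a choice in this model; only the reaction to it is.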

According to Wikipedia:

Consistency in CAP theorem means that every read receives the most recent write or an error.

That description corresponds directly to the concept of linearizability.

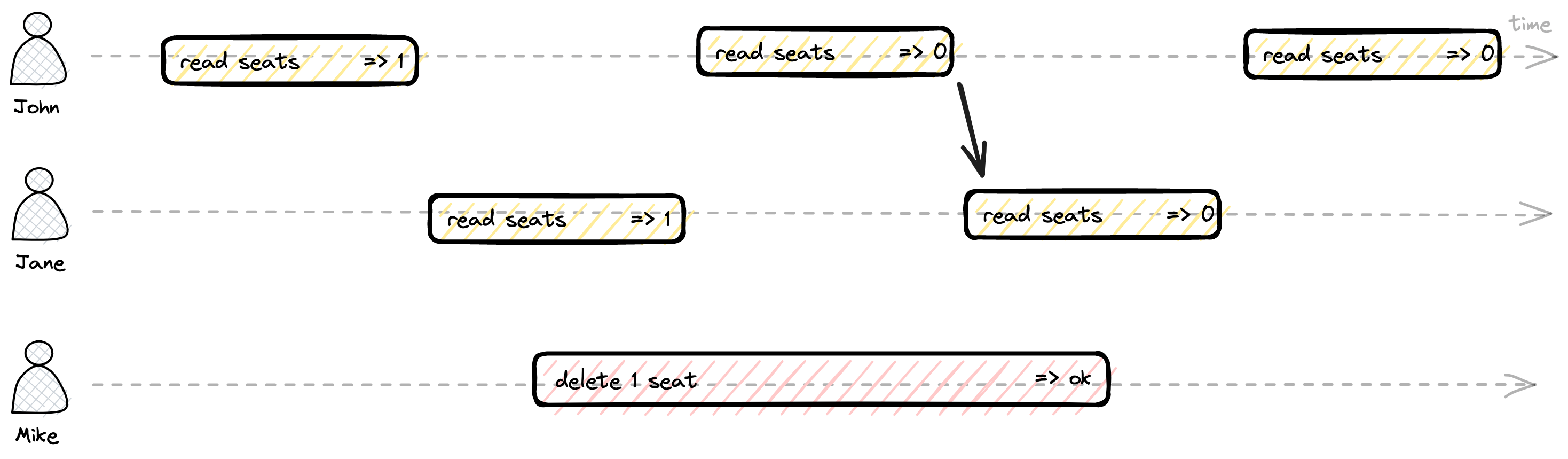

The exact definition of linearizability is quite subtle, but the basic idea is that as soon as one client successfully completes a write operation, all clients reading from the database must be able to see the value just written, as if there were only a single copy of the data (a single register), even if in reality there are multiple replicas/nodes/partitions.

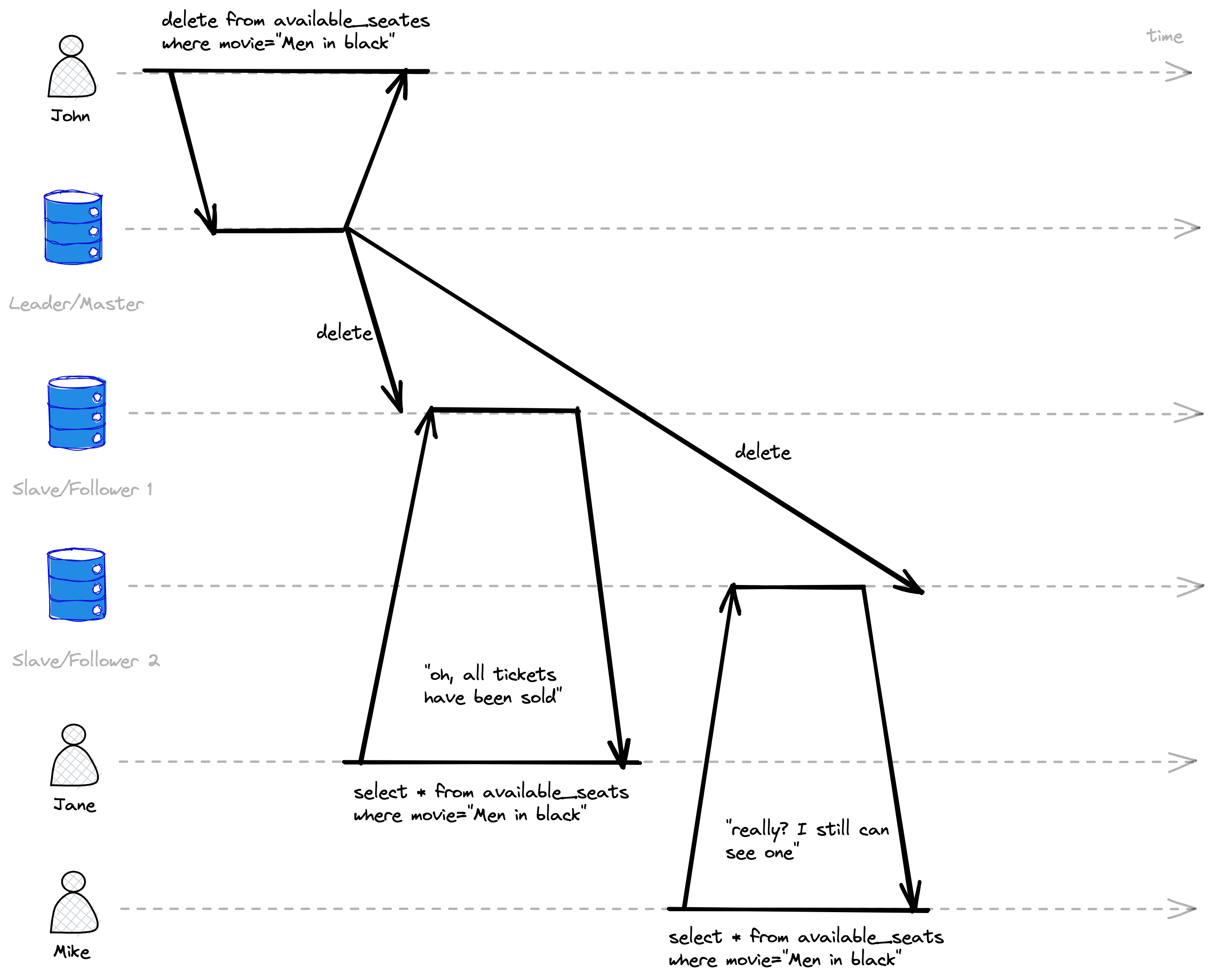

So after John booked the last seat for the “Men in Black” movie, Jane and Mike both checked availability but saw different results, due to asynchronous replication and network delay while the change propagated from the master database.

In the example above, there is a point in time at which John’s booking first becomes visible to one reader; in a linearizable system, every read that starts after that point must see that same version.
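The booking scenario can be reproduced with a toy model of asynchronous replication. Everything here is an assumption made for illustration: the `AsyncReplicatedStore` class, the follower names, and which of the two readers happens to hit the lagging follower.

```python
class Node:
    """One copy of the data: the number of free seats for a screening."""

    def __init__(self, name: str, seats: int) -> None:
        self.name = name
        self.seats = seats


class AsyncReplicatedStore:
    def __init__(self, seats: int, follower_names: list[str]) -> None:
        self.leader = Node("leader", seats)
        self.followers = {name: Node(name, seats) for name in follower_names}
        self.in_flight: dict[str, int] = {}  # follower name -> value not yet applied

    def write(self, seats: int) -> None:
        # The write is acknowledged as soon as the leader has it;
        # followers are updated later, each at its own pace.
        self.leader.seats = seats
        for name in self.followers:
            self.in_flight[name] = seats

    def deliver(self, name: str) -> None:
        # Simulates the replication message finally reaching one follower.
        if name in self.in_flight:
            self.followers[name].seats = self.in_flight.pop(name)

    def read(self, name: str) -> int:
        return self.followers[name].seats


store = AsyncReplicatedStore(seats=1, follower_names=["follower_1", "follower_2"])
store.write(0)               # John books the last seat
store.deliver("follower_1")  # follower_2 is still lagging

jane_sees = store.read("follower_1")
mike_sees = store.read("follower_2")
print(jane_sees, mike_sees)  # 0 1

store.deliver("follower_2")  # replication catches up
mike_sees_later = store.read("follower_2")
print(mike_sees_later)  # 0
```

Mike's read starts after Jane has already seen the new value, yet it returns the old one, which is exactly what linearizability forbids. The followers do converge once replication catches up, but by then the stale read has already happened.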

Other consistency examples

The word “consistency” is terribly overloaded across the whole IT domain. Apart from the examples above, you can also find it in areas such as:

- Consistent hashing is an approach to partitioning and rebalancing

- Eventual consistency – a model that gives up linearizability, but is a way to achieve high availability
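To show how little consistent hashing has to do with the meanings discussed earlier, here is a minimal sketch of a hash ring. The `HashRing` class, the node names and the choice of SHA-256 are assumptions for illustration, not a reference implementation. Keys are assigned to the next node position on the ring, so adding a node only moves the keys that the new node takes over.

```python
import bisect
import hashlib


def stable_hash(key: str) -> int:
    # SHA-256 is used only because it is stable across runs;
    # any reasonably uniform hash function would do.
    return int(hashlib.sha256(key.encode("utf-8")).hexdigest(), 16)


class HashRing:
    def __init__(self, nodes: list[str], points_per_node: int = 100) -> None:
        self.points_per_node = points_per_node
        self.ring: list[tuple[int, str]] = []  # sorted (position, node) pairs
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        # Each node is placed at many positions to even out the load.
        for i in range(self.points_per_node):
            bisect.insort(self.ring, (stable_hash(f"{node}#{i}"), node))

    def node_for(self, key: str) -> str:
        # The owner is the first ring position at or after the key's hash,
        # wrapping around to the start of the ring.
        index = bisect.bisect_left(self.ring, (stable_hash(key), ""))
        return self.ring[index % len(self.ring)][1]


keys = [f"booking-{i}" for i in range(1000)]
ring = HashRing(["node-a", "node-b", "node-c"])
before = {key: ring.node_for(key) for key in keys}

ring.add_node("node-d")  # rebalancing: one node joins
after = {key: ring.node_for(key) for key in keys}

moved = [key for key in keys if before[key] != after[key]]
print(f"{len(moved)} of {len(keys)} keys moved")
```

Every key that changes owner moves to `node-d`; keys never shuffle between the existing nodes. Here “consistent” means “stable under rebalancing”, which is unrelated to invariants or linearizability.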

Final thoughts

This article was an attempt to highlight the problem of people using the bare word “consistency” without clearly stating what exactly they mean, which actually introduces inconsistency into the discussion 🙂

If you would like to know more about the topics described above, and about other faces of consistency, I highly recommend the book “Designing Data-Intensive Applications” by Martin Kleppmann.