Recently I faced the task of dockerizing legacy applications written in ASP.NET (.NET Framework). Developers use IIS for the local development environment, but when a new teammate has to set up that environment, it takes ~8 hours of mumbo-jumbo to make everything work. So we decided to move the legacy application to Docker, at least for local development to begin with (aka “A Journey of a Thousand Miles Begins with a Single Step”).

Before I start, it is worth mentioning that I struggled to find much information about containerizing ASP.NET Framework apps in Windows containers. Almost all the threads I found were about Linux containers and .NET Core, so I decided to share my own experience with such a task.

So the requirements after the dockerization were:

- The application should still be hosted in IIS, due to internal app configuration

- Changes in code files should be visible in the application without additional actions (except a local build if needed)

- Code should be debuggable

- Deployed apps in containers should have custom hostnames

- “One command run” process (instead of 8 hours of configuration)

- Some apps use the legacy AngularJS framework with Bower, etc., so Node.js should be available in the containers

- The Application should work 🙂

As a base, I’m going to use the mcr.microsoft.com/windows/servercore/iis image: it is lighter than the mcr.microsoft.com/dotnet/framework/aspnet image, and the smaller, the better.

The code below downloads the Node.js distribution archive into the image, extracts it to a folder, and adds that folder to the PATH variable, which makes the node and npm commands available on the command line. Finally, it deletes the downloaded zip archive.

```dockerfile
ADD https://nodejs.org/dist/v12.4.0/node-v12.4.0-win-x64.zip /nodejs.zip
RUN powershell -command Expand-Archive nodejs.zip -DestinationPath C:\;
RUN powershell Rename-Item "C:\\node-v12.4.0-win-x64" c:\nodejs
RUN SETX PATH C:\nodejs
RUN del nodejs.zip
```

The next part of the code follows the same pattern for the Visual Studio Remote Tools: it downloads and installs them, since we will need them later for debugging.

```dockerfile
ADD https://aka.ms/vs/16/release/RemoteTools.amd64ret.enu.exe /VS_RemoteTools.exe
RUN VS_RemoteTools.exe /install /quiet /norestart
RUN del VS_RemoteTools.exe
```
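Keep in mind that deleting a file in a later RUN instruction does not make the image smaller: the archive added by ADD stays in its own layer. A minimal sketch of an alternative, assuming the same base image and Node.js version as above, downloads, extracts and cleans up the archive inside a single RUN, so the zip never reaches a committed layer. The NODE_VERSION build argument and the PowerShell SHELL line are my own additions, not part of the original Dockerfile; the Remote Tools installer could be handled the same way.

```dockerfile
# escape=`
FROM mcr.microsoft.com/windows/servercore/iis

# Run RUN commands in PowerShell, stop on the first error and hide
# the progress bar (it slows Invoke-WebRequest down considerably).
SHELL ["powershell", "-Command", "$ErrorActionPreference = 'Stop'; $ProgressPreference = 'SilentlyContinue';"]

# Hypothetical build argument; defaults to the version used in this article.
ARG NODE_VERSION=12.4.0

# Download, extract, rename and delete the archive in ONE layer,
# so the zip file never ends up in the image.
RUN [Net.ServicePointManager]::SecurityProtocol = [Net.SecurityProtocolType]::Tls12; `
    $url = ('https://nodejs.org/dist/v{0}/node-v{0}-win-x64.zip' -f $env:NODE_VERSION); `
    Invoke-WebRequest -UseBasicParsing -Uri $url -OutFile C:\nodejs.zip; `
    Expand-Archive C:\nodejs.zip -DestinationPath C:\; `
    Rename-Item ('C:\node-v{0}-win-x64' -f $env:NODE_VERSION) nodejs; `
    Remove-Item C:\nodejs.zip

# PATH setup unchanged from the original Dockerfile.
RUN SETX PATH C:\nodejs
```

The escape directive on the first line switches the Dockerfile escape character to a backtick, which is the usual choice on Windows because backslashes appear in every path.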

Since IIS will host the application, we need to remove the default content from the inetpub\wwwroot folder. Later we will use this folder for our own code.

```dockerfile
RUN powershell -command Remove-Item -Recurse C:\inetpub\wwwroot\*
```

To run an ASP.NET application in IIS, we have to install the required Windows features:

```dockerfile
RUN powershell -command Install-WindowsFeature NET-Framework-45-ASPNET
RUN powershell -command Install-WindowsFeature Web-Asp-Net45
```
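Each RUN instruction produces a separate image layer. If you prefer fewer layers, Install-WindowsFeature accepts several feature names in its -Name parameter, so a single instruction along these lines should have the same effect (a sketch, not what the original Dockerfile does):

```dockerfile
# Install both ASP.NET 4.x features in one RUN, i.e. one image layer.
RUN powershell -Command Install-WindowsFeature -Name NET-Framework-45-ASPNET, Web-Asp-Net45
```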

For IIS to serve the content of the inetpub\wwwroot folder, it needs permission to access the files. Since we are using Docker for development purposes only and the container is isolated, it is OK to grant Everyone access to the files:

```dockerfile
RUN icacls "c:/inetpub/wwwroot" /grant "Everyone:(OI)(CI)M"
```

Next, we set the working directory for the instructions that follow and for the running container:

```dockerfile
WORKDIR /inetpub/wwwroot
```

Last, but not least, we have to run the msvsmon.exe tool to allow remote debugging from Visual Studio. It is important to use the least restrictive options possible, to avoid adding firewall exceptions, dealing with authentication issues, etc. (but remember that this is not acceptable for any deployment with public access).

```dockerfile
ENTRYPOINT ["C:\\Program Files\\Microsoft Visual Studio 16.0\\Common7\\IDE\\Remote Debugger\\x64\\msvsmon.exe", "/noauth", "/anyuser", "/silent", "/nostatus", "/noclrwarn", "/nosecuritywarn", "/nofirewallwarn", "/nowowwarn"]
```

The whole Dockerfile:

```dockerfile
FROM mcr.microsoft.com/windows/servercore/iis

ADD https://nodejs.org/dist/v12.4.0/node-v12.4.0-win-x64.zip /nodejs.zip
RUN powershell -command Expand-Archive nodejs.zip -DestinationPath C:\;
RUN powershell Rename-Item "C:\\node-v12.4.0-win-x64" c:\nodejs
RUN SETX PATH C:\nodejs
RUN del nodejs.zip

ADD https://aka.ms/vs/16/release/RemoteTools.amd64ret.enu.exe /VS_RemoteTools.exe
RUN VS_RemoteTools.exe /install /quiet /norestart
RUN del VS_RemoteTools.exe

RUN powershell -command Remove-Item -Recurse C:\inetpub\wwwroot\*
RUN powershell -command Install-WindowsFeature NET-Framework-45-ASPNET
RUN powershell -command Install-WindowsFeature Web-Asp-Net45

WORKDIR /inetpub/wwwroot

RUN icacls "c:/inetpub/wwwroot" /grant "Everyone:(OI)(CI)M"

ENTRYPOINT ["C:\\Program Files\\Microsoft Visual Studio 16.0\\Common7\\IDE\\Remote Debugger\\x64\\msvsmon.exe", "/noauth", "/anyuser", "/silent", "/nostatus", "/noclrwarn", "/nosecuritywarn", "/nofirewallwarn", "/nowowwarn"]
```

Obviously, we could build and run this image with the docker build and docker run commands, but let’s imagine we also need to create a database container for our application. So, to fulfill the “One Command Run” requirement, I’ve created a docker-compose file:

```yaml
version: "3.8"

services:
  app:
    build: .
    image: samp.compose:latest
    ports:
      - "83:80"
      - "84:443"
    networks:
      app-network:
        ipv4_address: 10.5.0.5
    depends_on:
      - db
    hostname: samp

  db:
    image: microsoft/mssql-server-windows-express
    networks:
      - app-network
    ports:
      - "1433:1433"
    environment:
      - sa_password=test123
      - ACCEPT_EULA=Y
    hostname: dockerdb

networks:
  app-network:
    driver: nat
    ipam:
      config:
        - subnet: 10.5.0.0/16
          gateway: 10.5.0.1
```

As you can see, the docker-compose file contains 2 services and 1 network, almost nothing special. But 2 things are worth highlighting:

- Creating a network with an explicitly specified subnet and gateway. This allows assigning a predefined IP address to our containers (we will use it later for mapping a hostname on the host). If you don’t do that, then every time a container is created, it gets a new IP address automatically assigned from the pool of available IPs.

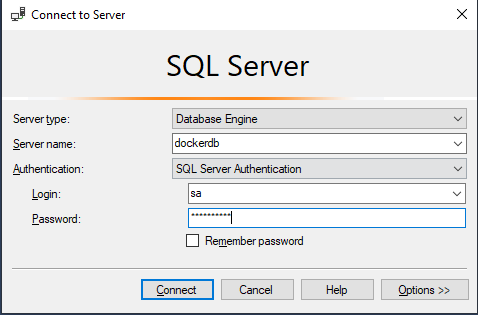

- Using hostname in the db service. This allows us to use the hostname in the connection string outside of the container and the app-network. For example, you can now connect to this SQL Server from your Windows machine with SSMS, using the hostname as the Server name:

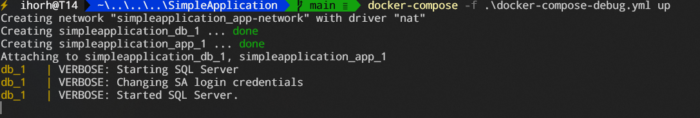

Now we can run our application with a single command, docker-compose up:

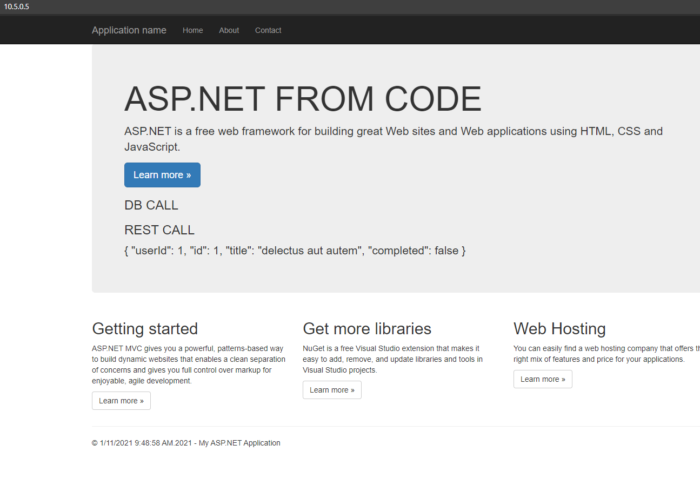

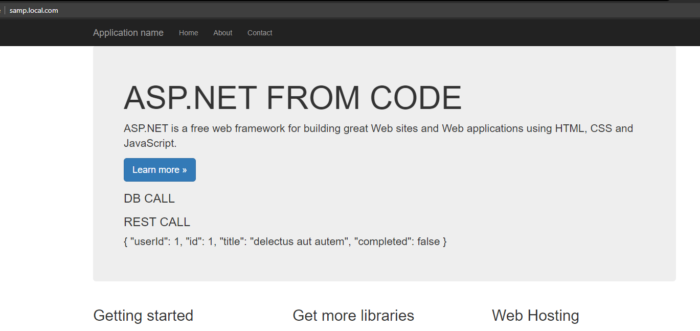

Now just open the browser, enter the IP address of the app service from the docker-compose file (in my case 10.5.0.5), and you should see the application running.

Now you can change your code and rebuild the project on your local machine (if needed) to see the changes on the website.

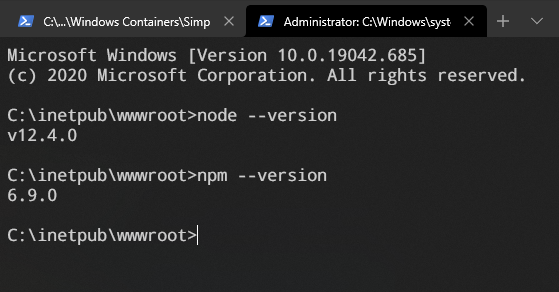

Let’s check whether Node.js is installed in our container (it may be needed for some frontend build steps).

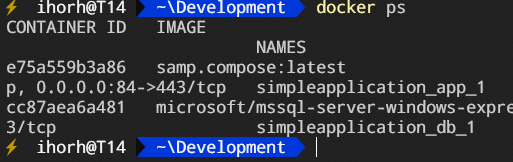

To do that, run the docker ps command and copy the container ID of the app container:

Now run docker exec -it {YOUR CONTAINER ID} cmd. This command connects to the container and starts cmd inside it. Then run node --version to check whether Node.js is installed.

The Node.js version is displayed, so we can now use it (together with npm) for further development.

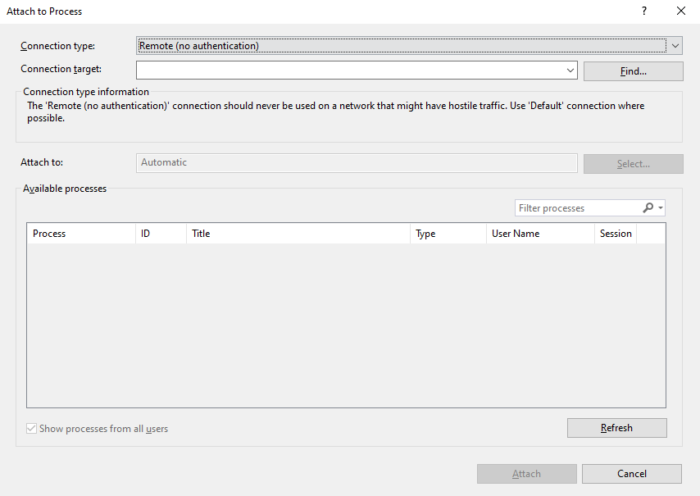

The next step is debugging. As you remember, we set msvsmon.exe as the entry point of our container, which allows us to use Visual Studio Remote Debugging. To attach, press Ctrl + Alt + P, select Remote (no authentication) as the connection type, and click the Find button:

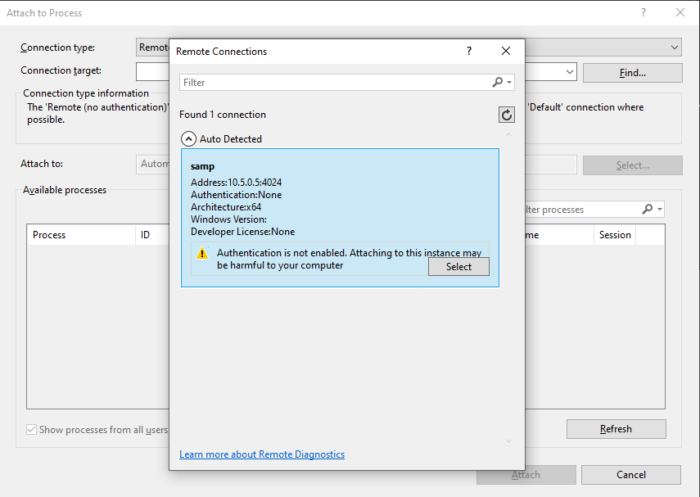

Next, select the app container from the list:

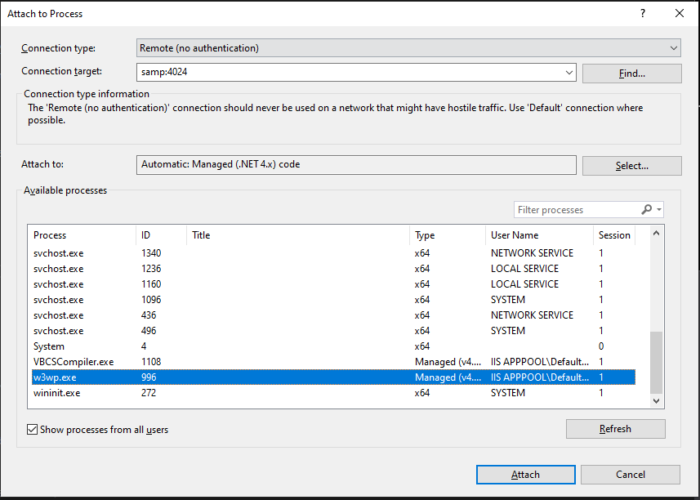

Finally, check Show processes from all users and select the IIS worker process w3wp.exe. (You may need to open your website in the browser first, because IIS starts the worker process lazily, on the first request.)

Once Visual Studio is attached to the process, you get the regular debugging experience.
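A side effect of this setup is that msvsmon.exe replaces the IIS base image’s own entry point, so the container’s lifetime follows the debugger rather than IIS. If you want to keep both, one possible sketch, assuming the base image’s entry point is C:\ServiceMonitor.exe w3svc (you can check it with docker image inspect mcr.microsoft.com/windows/servercore/iis), starts msvsmon.exe in the background and then runs ServiceMonitor in the foreground:

```dockerfile
# Assumption: C:\ServiceMonitor.exe is the entry point of the IIS base image.
# Start the remote debugger detached (same flags as before), then keep
# ServiceMonitor in the foreground; it monitors the W3SVC (IIS) service,
# which ties the container's lifetime to IIS instead of the debugger.
ENTRYPOINT ["powershell", "-Command", "Start-Process -FilePath 'C:\\Program Files\\Microsoft Visual Studio 16.0\\Common7\\IDE\\Remote Debugger\\x64\\msvsmon.exe' -ArgumentList '/noauth','/anyuser','/silent','/nostatus','/noclrwarn','/nosecuritywarn','/nofirewallwarn','/nowowwarn'; C:\\ServiceMonitor.exe w3svc"]
```

The trade-off is one extra PowerShell process at startup; remote debugging works the same way as with the original entry point.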

The last requirement from the list is that applications in containers should be reachable under a custom hostname. To do that, open the C:\Windows\System32\drivers\etc\hosts file (you need admin rights for this), append the following line to the end, and save the file:

```
10.5.0.5 samp.local.com
```

Here 10.5.0.5 is the IP from the docker-compose file, and samp.local.com is your custom hostname.

After that, open the browser (you may have to restart it and run the ipconfig /flushdns command) and go to http://samp.local.com

The full codebase is available in the GitHub repository, where you can also find an additional Dockerfile for a multistage build.

I hope this article will be helpful for people who are trying to containerize legacy Windows applications step by step.