Well, it’s almost the end of the year and, as has already become a tradition, it’s a good time to look back, analyze this year’s achievements, and create a plan for the next year.

Last year’s retrospective article – Retrospective of 2020. Plans for 2021.

Progress on 2021’s plans

- Finish the Leading People and Teams Specialization – 100% (cert link)

- Receive Microsoft Certified: Azure Solutions Architect Expert certificate

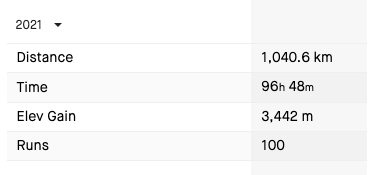

- Run at least 100 runs with a total distance of at least 500 km and take part in a race – 100%. According to Strava, I’ve done 100 runs with a total distance of 1040 km.

- Read at least 10 books – 50% (5/10) – see my 2021 year on GoodReads.com

- Solve 30 HackerRank problems – 1/30

- Learn 1 additional programming language – 100%: YAML (according to Google it is still a programming language xD). I improved my skills in it thanks to various DevOps projects.

- Publish at least 12 new posts on the blog – 4/12

- Contribute to at least one open-source project on GitHub

- Receive a Professional Scrum Developer certificate

- Get an English certificate (IELTS minimum 6.0 or similar) – postponed to 2023/4 due to changed plans and the fact that the certificate expires 365 days after the test.

- Visit at least 2 new countries – 150% (visited 3 countries I had never been to before: Lithuania, Latvia, and Estonia)

- Hike to Gerlach peak

- Sleep at least 1 night in a tent – 300% (3 nights spent in a tent 🏕)

- Donate at least 1.5 liters of blood (3 times) – 100% (1.8 liters, 4 times)

- Complete a First Aid Course

- Meaningful work-related change – 100% (changed my workplace to one that gives me the satisfaction of solving customers’ problems every day)

- Visit a technical conference or meetup (min 2)

- Start investing money – 100% (opened a few retirement accounts and invested in some stocks for the shorter term)

- Sleep 8+ hours – 85% (I do my best to go to bed at 10 PM and wake up at 7 AM at least 5 days a week)

- Do some crazy adrenaline rush thing (paraglide, glider) – 100%: finished the Vienna City Marathon 2021. Nevertheless, the glider is waiting for me in 2022 ;)

- Go to the gym (at least once a week, or 52 times a year) – 5/52

- Go skiing (3+ times) – 100% (2/3)

- Add at least 2 new board games to the home collection – 150% (+3 new board games in our collection)

Summary:

- 51% (12/23) – Fully completed targets

- 14% (3/23) – Progressed well, but not completed

- 35% (8/23) – Not started, or progress is negligible

“Off-plan” achievements of 2021

Apart from the main plan above, there are a few things I’ve managed to do and am happy to share with you:

- I’ve run a full marathon distance (at the annual Vienna City Marathon 2021). My target time was 3:50, but reality made its own corrections: after the 36th kilometer I got a painful calf cramp, so I mostly walked rather than ran the last 6 kilometers. My final time was 4:21. Even so, I’m really proud of this achievement, because the most difficult part of a marathon was not the race itself but the 3 months of preparation before race day.

- I’ve passed the test for a motorcycle driver’s license and bought my first bike. As it all happened close to the end of the riding season (in Poland it usually starts in March and ends in November), I hope to fully enjoy it from spring 2022 🏍

Even though 51% is far from 100%, in last year’s post I wrote:

So achieving >= 50% of goals might be a reasonable approach.

which means the “baseline” has been met. Nevertheless, as we all strive to be better in the “New Year”, I decided to raise the baseline to 60% for next year. This decision changed my approach to creating next year’s to-do list from “let’s put everything in and see what happens” to setting more precise targets divided into categories.

Plans for 2022

A few unfinished as well as “permanent” targets have been carried over from last year. In addition, there are a few new points:

Health

- Do 48 gym sessions (~1 per week) 🏋️‍♂️

- Run at least 100 runs with a total distance of at least 600 km and take part in a race 🏃‍♂️

Professional & Personal Development

- Receive AWS Certified Solutions Architect certificate 🔖

- Receive a Professional Scrum Product Owner certificate 🔖

- Solve 48 HackerRank problems (~1 per week) 👨‍💻

- Read at least 8 books 📚

- Learn at least 1 new programming language or fundamental framework 💻

- Have at least 100 “active days” in my GitHub account 👾

Leisure

- Hike to Gerlach peak ⛰

- Take a glider flight ✈️

- Visit 1 new country 🏙

- Complete a First Aid Course ⛑

- Add at least 1 new board game to the home collection 🎲

Miscellaneous

- Publish at least 12 new posts on the blog (~1 per month) 📄

- Donate at least 1.5 liters of blood (3 times) 🩸

Final thoughts

I believe that at the beginning of 2021 all of us had huge expectations for the year. Most of them were related to getting “back to normal” and the end of pandemic life. As we know now, that was not the case, but today we are undoubtedly better informed and better prepared for potential pandemic dangers than a year ago. In the new year of 2022, we need to keep hoping for a return to normal life, but beyond hoping, we have to do our best to reach that goal. Despite COVID-19, life moves forward, so let’s not focus only on bad news, but support each other, focus on our achievements, and work out regularly!

Happy and “Normal” New Year 2022!

P.S. Here are a few highlight photos from 2021. Unfortunately, not every occasion has a photo, but at least a few of them can share the mood of my 2021.