Do you have a backup of your database? Is it stored in a safe place? Do you have a plan B if someone deletes your database and its backups, by accident or intentionally?

These are the questions I faced after a recent security breach. Imagine that we have a database in the cloud and someone accidentally removes that database along with all of its backups and snapshots. Sounds like an impossible disaster, right? Well, even if it is pretty hard to do accidentally, that doesn’t mean it’s impossible. And if someone “from outside” gets access to your cloud production environment and intentionally removes your database, that sounds even more realistic. As we all know from Murphy’s law, the question is “When?” rather than “What if?”.

Regardless of the scenario, it is always good to have a plan B. So what can we do about it? Probably the most obvious option is to store the database snapshots somewhere other than where the database lives. Yes, I think it is a good option. Nevertheless, remember that every additional environment, framework or technology requires additional engineering time to support.

Another way is to prevent everyone (regardless of permissions) from removing database snapshots. So even if someone intentionally removes the database, there is always the possibility of a (relatively) quick restore. Fortunately, Amazon S3 lets us enable Object Lock on a bucket. There are two retention modes for Object Lock in S3 (AWS doc source):

- Governance mode

- Compliance mode

Governance mode allows a locked object to be deleted only by users with a special permission (`s3:BypassGovernanceRetention`), while Compliance mode does not allow a locked object to be removed by anyone (not even the root user) until its retention period expires.

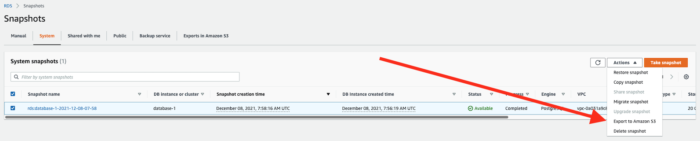
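To make the difference between the two modes concrete, here is a small sketch that models the deletion rule in plain TypeScript. It is a simplified model and not the AWS SDK: the `LockedObjectVersion` and `Caller` types and the `canBypassGovernance` flag are hypothetical names introduced only for illustration.

```typescript
// Simplified model of S3 Object Lock retention rules (not the AWS SDK).
type RetentionMode = "GOVERNANCE" | "COMPLIANCE";

interface LockedObjectVersion {
  mode: RetentionMode;
  retainUntil: Date; // the object version is protected until this instant
}

interface Caller {
  // Hypothetical flag: the caller holds s3:BypassGovernanceRetention
  // and explicitly asks to bypass governance retention in the request.
  canBypassGovernance: boolean;
}

function canDeleteVersion(
  obj: LockedObjectVersion,
  caller: Caller,
  now: Date
): boolean {
  if (now.getTime() >= obj.retainUntil.getTime()) {
    return true; // the retention period is over, the lock no longer applies
  }
  if (obj.mode === "GOVERNANCE") {
    return caller.canBypassGovernance; // only specially permitted callers
  }
  return false; // COMPLIANCE: nobody can delete, not even the root user
}
```

In this model a backup written in Compliance mode stays undeletable for the whole retention period no matter who asks, which is exactly the property we want for database snapshots.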

In addition to that, AWS recently introduced the ability to export RDS database snapshots to an S3 bucket (AWS doc source). From the AWS Console we can easily do that by clicking the Export to Amazon S3 button:

So we can combine snapshot exports with non-deletable files to protect database snapshots from deletion.

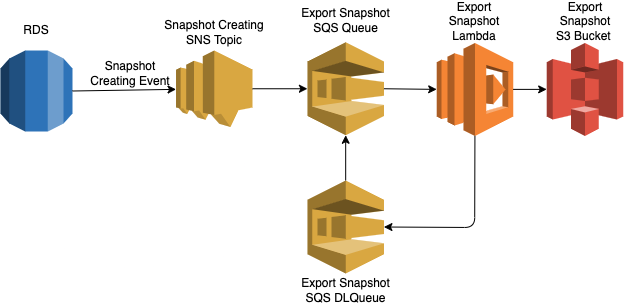

Last but not least: even though this is pretty simple to do manually through the AWS Console, it is always better to have such an important process automated, so our database is safely stored even while we are chilling on the beach during our long-awaited summer holidays 🏝. To do that, we can subscribe to RDS snapshot creation events and have a Lambda function export each newly created snapshot to the non-deletable S3 bucket.

The architecture looks like this:

Below you can find the Serverless Framework file that creates this infrastructure:

```yaml
service:
  name: rds-s3-exporter

plugins:
  - serverless-pseudo-parameters
  - serverless-plugin-lambda-dead-letter
  - serverless-prune-plugin

provider:
  name: aws
  runtime: nodejs14.x
  timeout: 30
  stage: dev${env:USER, env:USERNAME}
  region: eu-west-1
  deploymentBucket:
    name: ${opt:stage, self:custom.default-vars-stage}-deployments
  iamRoleStatements:
    - Effect: Allow
      Action:
        - "KMS:GenerateDataKey*"
        - "KMS:ReEncrypt*"
        - "KMS:DescribeKey"
        - "KMS:Encrypt"
        - "KMS:CreateGrant"
        - "KMS:ListGrants"
        - "KMS:RevokeGrant"
      Resource: "*"
    - Effect: Allow
      Action:
        - "IAM:Passrole"
        - "IAM:GetRole"
      Resource:
        - { Fn::GetAtt: [ snapshotExportTaskRole, Arn ] }
    - Effect: Allow
      Action:
        - ssm:GetParameters
      Resource: "*"
    - Effect: Allow
      Action:
        - sqs:SendMessage
        - sqs:ReceiveMessage
        - sqs:DeleteMessage
        - sqs:GetQueueUrl
      Resource:
        - { Fn::GetAtt: [ rdsS3ExporterQueue, Arn ] }
        - { Fn::GetAtt: [ rdsS3ExporterFailedQ, Arn ] }
    - Effect: Allow
      Action:
        - lambda:InvokeFunction
      Resource:
        - "arn:aws:lambda:#{AWS::Region}:#{AWS::AccountId}:function:${opt:stage}-rds-s3-exporter"
    - Effect: Allow
      Action:
        - rds:DescribeDBClusterSnapshots
        - rds:DescribeDBClusters
        - rds:DescribeDBInstances
        - rds:DescribeDBSnapshots
        - rds:DescribeExportTasks
        - rds:StartExportTask
      Resource: "*"
  environment:
    CONFIG_STAGE: ${self:custom.vars.configStage}
    REDEPLOY: "true"

custom:
  stage: ${opt:stage, self:provider.stage}
  region: ${opt:region, self:provider.region}
  default-vars-stage: ppe
  vars: ${file(./vars.yml):${opt:stage, self:custom.default-vars-stage}}
  version: ${env:BUILD_VERSION, file(package.json):version}
  rdsS3ExporterQ: ${self:custom.stage}-rds-s3-exporter
  rdsS3ExporterFailedQ: ${self:custom.stage}-rds-s3-exporterFailedQ
  databaseSnapshotCreatedTopic: ${self:custom.stage}-database-snapshotCreated
  rdsS3ExporterBucket: "${self:custom.stage}-database-snapshot-backups"

functions:
  backup:
    handler: dist/functions/backup.main
    reservedConcurrency: ${self:custom.vars.lambdaReservedConcurrency.backup}
    timeout: 55
    events:
      - sqs:
          arn: "arn:aws:sqs:#{AWS::Region}:#{AWS::AccountId}:${self:custom.rdsS3ExporterQ}"
          batchSize: 1
    environment:
      CONFIG_STAGE: ${self:custom.vars.configStage}
      DATABASE_BACKUPS_BUCKET: ${self:custom.rdsS3ExporterBucket}
      IAM_ROLE: "arn:aws:iam::#{AWS::AccountId}:role/${opt:stage}-rds-s3-exporter-role"
      KMS_KEY_ID: alias/lambda
      REGION: "eu-west-1"

resources:
  Description: Lambda to handle upload database backups to S3 bucket
  Resources:
    rdsS3ExporterQueue:
      Type: AWS::SQS::Queue
      Properties:
        QueueName: "${self:custom.rdsS3ExporterQ}"
        MessageRetentionPeriod: 1209600 # 14 days
        RedrivePolicy:
          deadLetterTargetArn:
            Fn::GetAtt: [ rdsS3ExporterFailedQ, Arn ]
          maxReceiveCount: 5
        VisibilityTimeout: 60
    rdsS3ExporterFailedQ:
      Type: "AWS::SQS::Queue"
      Properties:
        QueueName: "${self:custom.rdsS3ExporterFailedQ}"
        MessageRetentionPeriod: 1209600 # 14 days
    databaseSnapshotCreatedTopic:
      Type: AWS::SNS::Topic
      Properties:
        TopicName: ${self:custom.databaseSnapshotCreatedTopic}
    snapshotCreatedTopicQueueSubscription:
      Type: "AWS::SNS::Subscription"
      Properties:
        TopicArn: arn:aws:sns:#{AWS::Region}:#{AWS::AccountId}:${self:custom.databaseSnapshotCreatedTopic}
        Endpoint:
          Fn::GetAtt: [ rdsS3ExporterQueue, Arn ]
        Protocol: sqs
        RawMessageDelivery: true
      DependsOn:
        - rdsS3ExporterQueue
        - databaseSnapshotCreatedTopic
    snapshotCreatedRdsTopicSubscription:
      Type: "AWS::RDS::EventSubscription"
      Properties:
        Enabled: true
        EventCategories: [ "creation" ]
        SnsTopicArn: arn:aws:sns:#{AWS::Region}:#{AWS::AccountId}:${self:custom.databaseSnapshotCreatedTopic}
        SourceType: "db-snapshot"
      DependsOn:
        - databaseSnapshotCreatedTopic
    rdsS3ExporterQueuePolicy:
      Type: AWS::SQS::QueuePolicy
      Properties:
        Queues:
          - Ref: rdsS3ExporterQueue
        PolicyDocument:
          Version: "2012-10-17"
          Statement:
            - Effect: Allow
              Principal: "*"
              Action: [ "sqs:SendMessage" ]
              Resource:
                Fn::GetAtt: [ rdsS3ExporterQueue, Arn ]
              Condition:
                ArnEquals:
                  aws:SourceArn: arn:aws:sns:#{AWS::Region}:#{AWS::AccountId}:${self:custom.databaseSnapshotCreatedTopic}
    rdsS3ExporterBucket:
      Type: AWS::S3::Bucket
      DeletionPolicy: Retain
      Properties:
        BucketName: ${self:custom.rdsS3ExporterBucket}
        AccessControl: Private
        VersioningConfiguration:
          Status: Enabled
        ObjectLockEnabled: true
        ObjectLockConfiguration:
          ObjectLockEnabled: Enabled
          Rule:
            DefaultRetention:
              Mode: COMPLIANCE
              Days: "${self:custom.vars.objectLockRetentionPeriod}"
        LifecycleConfiguration:
          Rules:
            - Id: DeleteObjectAfter31Days
              Status: Enabled
              ExpirationInDays: ${self:custom.vars.expireInDays}
        PublicAccessBlockConfiguration:
          BlockPublicAcls: true
          BlockPublicPolicy: true
          IgnorePublicAcls: true
          RestrictPublicBuckets: true
        BucketEncryption:
          ServerSideEncryptionConfiguration:
            - ServerSideEncryptionByDefault:
                SSEAlgorithm: AES256
    snapshotExportTaskRole:
      Type: AWS::IAM::Role
      Properties:
        RoleName: ${opt:stage}-rds-s3-exporter-role
        Path: /
        AssumeRolePolicyDocument:
          Version: '2012-10-17'
          Statement:
            - Effect: Allow
              Principal:
                Service:
                  - rds-export.aws.internal
                  - export.rds.amazonaws.com
              Action:
                - "sts:AssumeRole"
        Policies:
          - PolicyName: ${opt:stage}-rds-s3-exporter-policy
            PolicyDocument:
              Version: '2012-10-17'
              Statement:
                - Effect: Allow
                  Action:
                    - "s3:PutObject*"
                    - "s3:ListBucket"
                    - "s3:GetObject*"
                    - "s3:DeleteObject*"
                    - "s3:GetBucketLocation"
                  Resource:
                    - "arn:aws:s3:::${self:custom.rdsS3ExporterBucket}"
                    - "arn:aws:s3:::${self:custom.rdsS3ExporterBucket}/*"

package:
  include:
    - dist/**
    - package.json
  exclude:
    - "*"
    - .?*/**
    - src/**
    - test/**
    - docs/**
    - infrastructure/**
    - postman/**
    - offline/**
    - node_modules/.bin/**
```

Now it’s time to add the Lambda handler code:

```typescript
import { Handler } from "aws-lambda";
import { v4 as uuidv4 } from "uuid";
import middy = require("middy");
import * as AWS from "aws-sdk";

export const processEvent: Handler<any, void> = async (request: any) => {
  console.info("rds-s3-exporter.started");

  // batchSize is 1, so each invocation carries exactly one SQS record.
  const record = request.Records[0];
  // RawMessageDelivery is enabled, so the body is the RDS event JSON itself.
  const event = JSON.parse(record.body);

  const rds = new AWS.RDS({ region: process.env.REGION });

  // Start exporting the newly created snapshot to the locked S3 bucket.
  const data = await rds.startExportTask({
    ExportTaskIdentifier: `database-backup-${uuidv4()}`,
    SourceArn: event["Source ARN"],
    S3BucketName: process.env.DATABASE_BACKUPS_BUCKET || "",
    IamRoleArn: process.env.IAM_ROLE || "",
    KmsKeyId: process.env.KMS_KEY_ID || ""
  }).promise();

  console.info("rds-s3-exporter.status", data);
  console.info("rds-s3-exporter.success");
};

export const main = middy(processEvent)
  .use({
    // Log the failure and pass it on, so SQS can retry the message
    // and eventually move it to the dead-letter queue.
    onError: (context, callback) => {
      console.error("rds-s3-exporter.error", context.error);
      callback(context.error);
    }
  });
```
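The handler above reads `event["Source ARN"]` straight from the parsed message. As a possible hardening step, the sketch below validates the record body before anything is sent to RDS. It is only an illustration: `SqsRecordLike`, `extractSnapshotArn` and the error messages are hypothetical names, and it assumes the body is the raw RDS event JSON with a `"Source ARN"` field, as in the handler.

```typescript
// Minimal shape of what we need from an SQS record (hypothetical type).
interface SqsRecordLike {
  body: string;
}

// Returns the snapshot ARN from a raw RDS event notification,
// or throws a descriptive error if the message is malformed.
function extractSnapshotArn(record: SqsRecordLike): string {
  let parsed: unknown;
  try {
    parsed = JSON.parse(record.body);
  } catch {
    throw new Error("rds-s3-exporter.invalid-json");
  }
  if (typeof parsed !== "object" || parsed === null) {
    throw new Error("rds-s3-exporter.invalid-event");
  }
  const arn = (parsed as Record<string, unknown>)["Source ARN"];
  if (typeof arn !== "string" || !arn.startsWith("arn:")) {
    throw new Error("rds-s3-exporter.missing-source-arn");
  }
  return arn;
}
```

Throwing here keeps the failure visible: the message goes back to the queue and, after the configured number of receives, ends up in the dead-letter queue instead of silently starting an export with an empty `SourceArn`.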

As there is no built-in way to define a flexible filter for the databases we want to export, we can always add custom filtering to the Lambda function itself. You can find an example of such logic, as well as the whole codebase, in the GitHub repository.
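As a rough idea of what such filtering could look like, here is a minimal sketch that decides whether a snapshot should be exported based on its name. It is not the code from the repository: the prefix list and the function names are hypothetical, and it assumes that DB snapshot ARNs contain a `:snapshot:` segment followed by the snapshot name (with automated snapshots named `rds:<instance>-<timestamp>`).

```typescript
// Hypothetical allow-list of snapshot name prefixes we want to export.
const EXPORTED_SNAPSHOT_PREFIXES = ["rds:orders-db-", "manual-orders-db-"];

// Extracts the snapshot name from a DB snapshot ARN,
// or returns undefined if the ARN is not a DB snapshot ARN.
function snapshotNameFromArn(arn: string): string | undefined {
  const marker = ":snapshot:";
  const index = arn.indexOf(marker);
  return index === -1 ? undefined : arn.slice(index + marker.length);
}

// Returns true only for snapshots whose name starts with an allowed prefix.
function shouldExport(
  arn: string,
  prefixes: string[] = EXPORTED_SNAPSHOT_PREFIXES
): boolean {
  const name = snapshotNameFromArn(arn);
  if (name === undefined) {
    return false;
  }
  return prefixes.some((prefix) => name.startsWith(prefix));
}
```

The handler could call `shouldExport(event["Source ARN"])` and return early when it yields `false`, so snapshots of databases we do not care about never start an export task.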

Right before publishing this post, I found that AWS has recently (Oct 2021) released AWS Backup Vault Lock, which does the same thing out of the box. You can read more about it on the AWS documentation website. Nevertheless, at the time of publishing this post, AWS Backup Vault Lock had not yet been assessed by a third party for compliance with the SEC 17a-4(f) and CFTC regulations.